Testing embedding Power BI

This is the first test:

Put the link here

Rachael's Thoughts

I am a BI and Analytics professional trying to learn something new every day. I am also a wife, mother and community member in the Greater Boston area, all of these are other areas where I learn something new all the time. For those who will read old posts, my original plan was to document a series of hikes with my family - especially with my daughter. We never did all of the 4,000 footers in NH, but we've had many wonderful hikes since I originally setup this blog 8 years ago.

Sunday, March 22, 2020

Tuesday, April 4, 2017

Moving to SEI

In Motion!

It's a happy announcement: I joined SEI's Boston Group this week to become part of this strong local consulting firm and its fabulous culture that operates in a variety of industries and areas of specialization with IT.

It wasn't easy to leave my friends and respected colleagues at Pragmatic Works, and I look forward to working with them again in the future.

Here on my blog I will continue to share what I learn. I fully expect to be learning at least as fast this year than I did last, so hold on here we go!

If you are curious about what I like about SEI, you should see the new website they've rolled out just in time for my arrival! sei.com

If you are looking for any IT assistance, please check out our capabilities.

Friday, March 24, 2017

Power BI Gotchas

Answers to some common questions and frustrations for new users of Power BI.

I'd like to help

save you some time banging your head and searching the internet by pointing out

some of the issues I've run into when working with clients over the last year.

Data loading and preparation:

There are many

articles and blogs about the technical aspects of loading data into Power BI so

I won't cover that here in great detail. However, I would like to call out some

basic data transformations and cleansing activities that can make a big

difference to the experience of report users.

Once you have

loaded a table check the columns:

1.

Rename your data columns to use business

terminology in the field names.

This isn't a

database, this is a reporting data model and your business users need to be

able to understand the names of the columns.

2.

Add units to your column names.

Again, the

objective here is to make the reports readable and easy to interpret. You don't

want your uses to wonder if you were summing minutes or seconds in the

reporting graphic.

3.

Remove unnecessary columns:

Will the column be

used for reporting? Really think about this, will the users of your reports

NEED to see this column, slice or filter by it? If not, it doesn't belong in

your reporting data model.

4.

Remove bad data, or unnecessary data records:

Will the RECORDS in

your table all be reported on? Again, every record might not have valid data.

Check for this and remove records that are not going to provide good insight in

your reporting.

5.

Remove unused records, even from date tables!

Are the records

part of the data set you need? Sometimes we load records that go all the way

back years before our reporting range. Unless those records contribute to the

value of information shown in the report, remove them. This is ESPECIALLY true

of the Date or Calendar tables - think about those extra records. This is much

more data than you need to be filtering through for your reports and interactive

visualizations.

6.

Use data types wisely:

Change data types

to be accurate for your business definition of the column. Sometimes colums will come in as whole numbers to Power BI because the sample used to define the

column "sees" only numbers in the first 1,000 rows for the preview.

If this is a text based column, your data load will error when you load the

rest of the data.

7.

Use Trim if you think there might be leading or

training spaces in your data.

Once you have

loaded the data there is still preparation to complete. A common question often

comes up right about now though, so let's talk about the

Confusion between Calculations and Measures:

These are similar:

that the engine will calculate them for you, and both can be aggregated in your

reports and visualizations. The key difference is that calculations are

values that are stored in each row of your table, and measures are not

calculated until used in the report context.

1.

Creating Calculations and Measures

Format your facts

as part of your data model setup:

Often you will be

told to use custom calculations instead of the default summarization on your

columns.

1.

You will then know, and be able to define in a

data dictionary, exactly how the calculation was built.

2.

However, if you do use default summarization,

there will be a benefit when viewing reports: you will be able to use the

"View records" command to see what records were included in the

aggregate. This does not work for measures.

2.

Sometimes you need to create a new column as part

of each row:

For example, you

might want to calculate Amount as a Quantity * Price - that is a calculated

column

1.

The "context" of the calculation is the

row - always

2.

Created on data load and stored just like other

columns loaded by Power Query

3.

Sometimes you want to show calculation results

that change as we interact with our reports:

You might need to

know the maximum Amount which is max(Quantity * Price), but when reviewed

across all rows for orders placed within the current Year it will give a

different answer than when viewed for all orders within a given City.

1.

In this case the "context" is all of

the rows that are being evaluated, and which rows will be determined by

"where" the measure is being used

i.

Used as a value in a chart by dates, it might be

the max Amount per Year, then you might drill down to max by Quarter and max by

Month

ii.

Used as a value in a Geographic map, it might be

the max Amount in the Region, then drill down to a given State or Province,

City, etc…

Some other important and sometimes confusing features:

1.

Go through and format your facts as part of your

data model setup

i.

Set default summarization.

ii.

Set the format to use for showing the fields

It is easier to do

all of these steps for all of your columns at one time so that your reports

look great from the start and you will know that the standard has been

implemented. It is still likely that you will need to return to update or fix

some which you can do a t any time.

2.

Create Groups:

You can categorize

your records automatically by creating groups that become a new column in your

table. "Other" as a category allows for new data values added in your

incoming data. Be sure to check overtime that you don't need to add a new

category though.

3.

Create Hierarchies:

i.

Creating a hierarchy is something people do accidentally

all of the time, then they worry about damaging their data model by deleting

them. Don't worry if you get an accidental hierarchy with an unintended

drag/drop of a column in the Fields list, just go ahead and delete that

hierarchy - your fields will stay as they were.

ii.

To create an intentional hierarchy drag a lower

level field over to the highest level in the hierarchy and drop it there. There

will be a yellow dotted line shown where it will drop. Each other column added

to the "pile" will be added at the bottom.

iii.

If necessary rearrange them by dragging them up

or down, or delete one using the "x" to the far right.

iv.

Adding a Hierarchy to the axis of a visualization

will enable drop down.

v.

In the axis of a graph - you can remove levels of

hierarchy that are not desired for that graph without any effect on the

hierarchy.

4.

Even if you set the format of your column, you

may need to set the visual scale on each individual visualization settings:

None, Thousands. Pay attention to this, it makes a big difference to

readability

5.

You can now "See Data" AND "See

Records"

i.

See Data will show the values that are in the

visualization - as a table.

ii.

See Records will show the table records that

comprise the aggregations that are shown in the graph. Every record in it's

entirety.

iii.

As mentioned above, "See Records" only

works with data elements (including calculated columns?) not with measures that

can be summarized

iv.

Trick - if you want a count as your visual value,

but your data doesn't have a uniquely identifying column to "count",

create a column that can be summarized for the counting (like

"Trips") and default it to the value of 1. You can then sum or count

the values in this column to know how many unique "trips".

6.

If you create a visualization (a bar chart) and

the values are the same in every data point you have probably forgotten to

create your relationships. You can draw them on the Relationships tab, or us

the Manage Relationships dialog box from the Home ribbon bar.

7.

How to review your relationships:

If you have many

tables and many relationships and want to review them all quickly, the dialog

box from Manage Relationships may be the better choice, as it is quickly

scanned for errors in column names, etc.…

8.

Single and "Both" direction filtering

make a big difference in some reports:

i.

Single direction filtering enables the value from

one slicer or visual to affect the values on the related table. However,

filtering the second table by another value will not affect the values shown

from the slicer.

ii.

The best way to visualize this is to see 2

slicers from two dimensional tables and when the dimensions are both single

direction, the two slicers will not affect each other.

iii.

If the 2 dimensions are "Both"

direction filtering, then selecting a value from one will affect the values

shown from the other.

9.

Drill down in charts

i.

There are 3 drill down buttons on a chart that

contains hierarchies:

ii.

The single down arrow button is a toggle to turn

drill down on, when on selecting a value in the chart will "drill

down" to the next level.

iii.

The double arrow will drill down to the next

level, aggregating at that level ONLY:

i.

Ex: if you go from showing 3 years of data down

to showing months, the values for each month will be the aggregate of all of

the month's values for every year, all together

iv.

The forked arrow aggregates at the combination

of the level above and the new level:

i.

Ex: if you go from showing 3 years down to

months, you will see the values for each month of every year.

10.

A Date table enables time calculations if certain

criteria are met:

i.

A date column must have a date data type

ii.

The date column must show a continuous value for

every date in the time period (ex: 1/1/2017, 1/2/2017, …)

iii.

For any time intelligence calculations to work:

i.

Joins with the date table must be made on the

date column - NOT on a surrogate key.

ii.

The values in the time calculation must

also be continuous. If you are aggregating ParallelPeriod values, you must be

doing it across a dataset that has a value for every date in the time period.

11.

Why isn't my calculation working?

i.

Check your calc - of course, but if you don't

find anything

ii.

Save some time by reviewing your:

i.

Page and Report filters

ii.

Visualization filters

iii.

Whether you have Relationship filtering in Single

or Both directions

These are some of

the "gotchas" that can waste your time when you create your reports

and can cause your users confusion when they use your reports.

Are there others

that you've spent time resolving that you'd like to share?

Monday, December 12, 2016

Reading SAS files from Power BI using R Script

Recently I have been working to read SAS files (sas7bdat file format) in Power BI. SAS technical support and the Power BI community team have both worked with me to attempt to use the SAS Local Provider for OLE DB which is the only provider that does not require a SAS server.

We were able to use the SAS Local Data Provider 9.44 in Excel by using the Data Connection Wizard to access Data Link Properties and to read the sas7bdat data into Excel as a data source (see details on this at the bottom of this post).

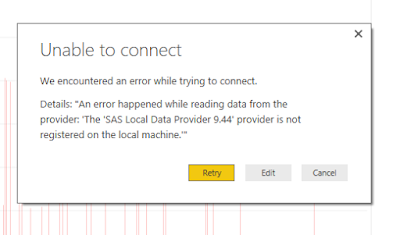

However, following similar steps in Power BI lead to an error message because "the SAS Local Provider for OLE DB does not 'like' the GetOleDbSchemaTable method. (quote from SAS Technical support):

Now, this error seems actionable until you remember that we just used the provider to connect from Excel. This is due to the use of the GetOleDbSchemaTable method and we could not find a way to work around this problem in Power BI.

I have submitted this as a support ticket to Microsof and I look forward to their response on how to get a supported connection to SAS files.

The next step is to find another way:

We were able to use the SAS Local Data Provider 9.44 in Excel by using the Data Connection Wizard to access Data Link Properties and to read the sas7bdat data into Excel as a data source (see details on this at the bottom of this post).

However, following similar steps in Power BI lead to an error message because "the SAS Local Provider for OLE DB does not 'like' the GetOleDbSchemaTable method. (quote from SAS Technical support):

|

| Error Message Text of error: "The 'SAS Local Data Provider 9.44' provider is not registered on the local machine." |

I have submitted this as a support ticket to Microsof and I look forward to their response on how to get a supported connection to SAS files.

The next step is to find another way:

Loading

Data into the Dashboard file using R Script:

The best method I have found so far to read SAS files into Power BI is using R script:

https://businessintelligist.com/2016/09/09/reading-sas-sas7bdat-files-in-power-bi/

1) Locate your SAS Data set files (you can download a sample set in ZIP format from: http://www.principlesofeconometrics.com/sas.htm)

2) Install R locally (I have been using R 3.3.2 from CRAN (https://cran.r-project.org/bin/windows/base/)

3) Install Power BI Desktop locally:

The best method I have found so far to read SAS files into Power BI is using R script:

https://businessintelligist.com/2016/09/09/reading-sas-sas7bdat-files-in-power-bi/

1) Locate your SAS Data set files (you can download a sample set in ZIP format from: http://www.principlesofeconometrics.com/sas.htm)

2) Install R locally (I have been using R 3.3.2 from CRAN (https://cran.r-project.org/bin/windows/base/)

3) Install Power BI Desktop locally:

|

| Download Power BI Desktop from http://app.powerbi.com |

4) Open Power BI, and first, set your version of R in Options and Settings:

a. Click

the “x” in the upper right corner of the splash dialog box to close it:

|

| Close the Power BI Desktop dialog box |

c. You should be able to select your installed R version from the Detected R home directories list and Detected R IDEs

5) Now, setup the data source for your Power BI solution:

a. Drop

down Get Data and select More… -> Other –> R Script, then the Connect

button

b. The

Execute R Script dialog box provides an input location for the following script

which will set the working directory, access the library and read the file into Power BI:

c. Click

OK to see the content of the SAS file, select the table(s) to load (multiple table files haven’t been tested by

me using R Script)

d. Select

to Load the data directly, or Edit to use the Query Editor to filter, clean,

reformat or otherwise transform the data for this and all future data loads.

6)

Click Close & Apply when the data set is

complete and you are ready to use it in Power BI

Using a faster library:

I have found some information that haven is

a faster library than sas7bdat, the sample files for SAS that I have access to are not sufficient to test this, but the haven library works very well:

Using haven the script changes to reference

the haven library (https://cran.r-project.org/package=haven)

and to use the read_sas function to read the SAS file from that library as you

can see in this sample to read another data file, USA:

I am very interested to know what the

results are using production size and complex datasets, and am willing to

help in anyway that I can if you have questions about using this method.

Publishing

and Refresh of data:

The Power BI solution can be published to http://app.Powerbi.com using the Publish

button. The R Script has imported the dataset, so that will be published to the

Power BI Service along with the reports.

R Script as a data source does not yet support

refresh. There is a setting for refreshing data from Files, but that does not

work for R Script as a source, it is for CSV, etc…

Rachael

Tuesday, October 11, 2016

Azure Cloud Based Analytics

What are the benefits of moving our analytics to the cloud? (link to presentation slides)

The elements of analytics are the same whether on premises or in the cloud. Cloud based tools for analytics can provide a platform that is far more extensible, economical and powerful than traditional on-premise. This session will review some cloud analytics use cases and how the tools from Microsoft combine to create business insight and value.

Azure Cloud Tools

Microsoft Intelligence (edited, previously Cortana Intelligence Suite) is a powerful combination of cloud based tools that manage data, provide analysis capability and store or visualize results. Together these tools represent the six elements of data analytics.

Microsoft Intelligence (edited, previously Cortana Intelligence Suite) is a powerful combination of cloud based tools that manage data, provide analysis capability and store or visualize results. Together these tools represent the six elements of data analytics.

The talk on Data Based analytics includes a demonstration of Data Lake, PolyBase, Azure SQL Data Warehouse, Power BI, and Azure Machine Learning. The links to Power BI and Azure ML will take you to the apps, each has a free account you can sign up and use for testing. I encourage you to sign up for the free offer for $200 in Azure Credits (United States based offer, at the time of this posting Oct. 11, 2016).

Analytics projects

According to Gartner analytics projects include 6 elements and there are common challenges for each of these elements that must be addressed in any analytics project:

1. Data sources - volume alone and the need to move large data sets

2. Data models - complexity of setup as they simulate business processes

3. Processing applications - data cleansing, remediation of bad data

4. Computing power - analysis requires considerable power

5. Analytics models - design of experiments and management of the models, model complexity

6. Sharing and storage of results - informative visualizations and results embedded in applications

Tools provided by cloud vendors provide assistance and in some cases, with far superior solutions to these issues.

The Cloud analytics tools are designed to address the challenges we’ve been discussing:

1) Scalability: Agility/ Flexibility

-large data sources –power them off when not in use

- heavy computing capability – pay only for what you use

- Stand up a new environment – pilot a new capability

- integrate data sources with multiple visualization and analytics environment

2) Economy –

- less expensive to stand up than hardware and software,

- fewer skills necessary in house for implementation, configuration, integration, use,

- management and maintenance of systems

3) Security

- Azure is designed for security from the ground up

- Microsoft spends $1B a year on security research – more than most security firms

- identity based security throughout the stack

- protection for disaster recovery, regulatory specific platforms to address security needs

4) Capability

- Integration with desktop tools for embedding visualizations, integrating predictive analytics

- Consumer technology style interfaces enable faster learning and require fewer skills than script based on premises tools

- Collaboration enabled by ties across Office 365 tools and Mobile capabilities

- Powerful servers can simply process far more data and analysis than most local servers

According to Gartner analytics projects include 6 elements and there are common challenges for each of these elements that must be addressed in any analytics project:

1. Data sources - volume alone and the need to move large data sets

2. Data models - complexity of setup as they simulate business processes

3. Processing applications - data cleansing, remediation of bad data

4. Computing power - analysis requires considerable power

5. Analytics models - design of experiments and management of the models, model complexity

6. Sharing and storage of results - informative visualizations and results embedded in applications

Tools provided by cloud vendors provide assistance and in some cases, with far superior solutions to these issues.

The Cloud analytics tools are designed to address the challenges we’ve been discussing:

1) Scalability: Agility/ Flexibility

-large data sources –power them off when not in use

- heavy computing capability – pay only for what you use

- Stand up a new environment – pilot a new capability

- integrate data sources with multiple visualization and analytics environment

2) Economy –

- less expensive to stand up than hardware and software,

- fewer skills necessary in house for implementation, configuration, integration, use,

- management and maintenance of systems

3) Security

- Azure is designed for security from the ground up

- Microsoft spends $1B a year on security research – more than most security firms

- identity based security throughout the stack

- protection for disaster recovery, regulatory specific platforms to address security needs

4) Capability

- Integration with desktop tools for embedding visualizations, integrating predictive analytics

- Consumer technology style interfaces enable faster learning and require fewer skills than script based on premises tools

- Collaboration enabled by ties across Office 365 tools and Mobile capabilities

- Powerful servers can simply process far more data and analysis than most local servers

How can my business benefit from analytics in the cloud?

Data, provided and used as a valuable asset of the firm provides leverage employees apply to problem solving activities. Fact based trend prediction leads to insights that provide business value.

Businesses taking advantage of the cloud to benefit from increased cloud computing power, the ability to handle large data sets and for the mobility provided by access to the analytics platform from any location.

Data, provided and used as a valuable asset of the firm provides leverage employees apply to problem solving activities. Fact based trend prediction leads to insights that provide business value.

Businesses taking advantage of the cloud to benefit from increased cloud computing power, the ability to handle large data sets and for the mobility provided by access to the analytics platform from any location.

Other articles that may help to answer this question are:

Power BI is a great starting point for moving analytics to the cloud

Power BI brings many customers their first exposure to cloud analytics. Companies find multiple benefits of collaboration between users, mobile access, and the experience of adopting a new way to use data. Workspaces provide secure areas to work together on Power BI solutions and are linked to Exchange groups and OneDrive folders where other collateral can be shared.

Storage in the cloud

Using Azure cloud based storage is relatively inexpensive, remotely accessible from anywhere, secure and in coming data is free (data moving out of your region is charged). Large data sets can be managed in Azure Blob Storage, Azure Data Lake, Azure SQL DW and Azure SQL Database.

Predictive Analytics with Azure Machine Learning

Azure ML provides a friendly tool for building machine learning experiments, cleaning and visualizing data. The environment provides familiar analytic tools for R and Python scripting, built in models for statistical analysis and a process for encapsulating trained models in web services as predictive experiments. Integration with Excel and other applications is simplified by the web service interface.

Questions:

Q1) In the PolyBase example does modeling the data by casting columns hinder performance of the data load in the "Create Table As" (CTAS) statement?

(Original posting left this question unanswered, edited to add the answer below)

In creating the External Table the columns must be of the same data type.

However, when querying the external table to use CTAS to populate a SQL DW table I found that using cast to change the datatype of the column was faster to complete the new table creation. This was true for two cases, one where I converted in the input column varchar2(20) to an Int and another where I converted the input varchar(100) to a Date data type. I suspect this is due to the increased speed of input for Int and Date data types over varchars, but it indicates that the original query time isn't different.

In creating the External Table the columns must be of the same data type.

However, when querying the external table to use CTAS to populate a SQL DW table I found that using cast to change the datatype of the column was faster to complete the new table creation. This was true for two cases, one where I converted in the input column varchar2(20) to an Int and another where I converted the input varchar(100) to a Date data type. I suspect this is due to the increased speed of input for Int and Date data types over varchars, but it indicates that the original query time isn't different.

Q2) Is it simple to switch from cold to hot in Azure Blob Storage?

In regions where these settings have been enabled (not East yet), they can be changed in the Configuration blade of the Storage Account.

Q3) When to use Data Lake in comparison to Blob Storage.

In the demo for this talk I read the NYC 2013 Taxi data from the 12 - 2+GB CSV files into Azure SQL DW using CTAS. At this time, Data Lake is still in preview, and we cannot yet use CTAS with Azure Data Lake. This forced me to use Azure Blob Storage.

Additoinal differences are that Data Lake is optimized for parallel analytics workloads and for high throughput and IOPS. Where Blob Storage is not optimized for analytics but is designed to hold any type of file. Data Lake will hold unlimited data and Blob Storage is limited to 500TB. There are cost differences too, I recommend the Azure Pricing Calculator.

I recommend the Microsoft article Comparing Azure Data Lake Store and Azure Blob Storage for details.

Wednesday, September 28, 2016

Performance Tips and Techniques for Power BI and Power Query (update)

Power BI Performance Tips and Techniques update:

Back in March of this year I created an article and a webinar for the Pragmatic Works Blog.The original concepts still hold, Power BI and Excel Power Query are very powerful tools for building data models and sharing data discovery and visualizations. As you move from initial prototyping to a more mature model you may find that performance can be bogged down if you don't manage the structure of your reporting information. This talk provides Tips and Techniques to manage this information (link to updated slides).

Since the original webinar I have presented this topic at a few user groups and SQL Saturdays and was asked some very good questions.

I'd like to answer these questions here, and to update the slide deck as well.

If the join is done in the data load, the data model contains only one table, as in the example discussed in the original presentation.

If there is a relationship created between two tables loaded in to memory, the joined tables are both shown in the pivot tables that result from the macro, and how much memory taken by each column is shown as a datatype and total for the column.

2) In NYC at the Power BI User Group a member asked: Does the HierarchySlicer cause performance problems?

To test this, I used a data table with nearly 14M rows linked to a Date table. Power BI connected via direct query to SQL Server 2016.

The Hierarchy Slicer (HierarchySlicer.0.7.9.pbiviz) at Year / Month on the Date table compared to a "flat" YearMonthName non hierarchical slicer. In this case, each new slicer selection took about 2 seconds to repopulate the summary table to the left. The two took the same amount of time, I did not see a difference in performance.

3) At SQL Saturday in Dallas this question came up: Is the SSAS formula engine still single threaded in Power BI on the portal as it is on the desktop? The SSAS engine is the same in Power BI on app.powerBI.com as it is on your local drive. There would not be an improvement on calculations caused by multi-threading, although the local machine may or may not have more resources to dedicate to the formula engine thread.

Thank you all for the great questions, please comment on others questions or if you have more information!

Friday, July 8, 2016

World Class Data Organizations and SQL Server 2016

World Class Data Organizations (WCDO) intentionally invest in and build their data cultures to create business value. These businesses integrate data into daily activities beyond report or dashboard reviews. Employees know what data elements represent, how to access them, and how to use them to create new insights.

WCDO and SQL Server 2016 (pdf) presents the primary characteristics and activities of WCDO's and expands on some of the features of SQL Server 2016 designed to enable those activities.

This is the slide deck presented on June 30, 2016, and the beginning of a series of presentations I will deliver on this topic.

Labels:

Best Practices,

BI,

data,

SQL Server,

World Class Data Organizations

Subscribe to:

Posts (Atom)