Recently I have been working to read SAS files (sas7bdat file format) in Power BI. SAS technical support and the Power BI community team have both worked with me to try the SAS Local Provider for OLE DB, which is the only provider that does not require a SAS server.

We were able to use the SAS Local Data Provider 9.44 in Excel by using the Data Connection Wizard to access Data Link Properties and to read the sas7bdat data into Excel as a data source (see details on this at the bottom of this post).

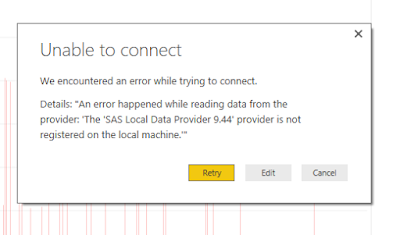

However, following similar steps in Power BI led to an error message because "the SAS Local Provider for OLE DB does not 'like' the GetOleDbSchemaTable method" (quote from SAS Technical Support):

Text of error: "The 'SAS Local Data Provider 9.44' provider is not registered on the local machine."

Now, this error seems actionable until you remember that we just used the provider to connect from Excel. The failure is due to the use of the GetOleDbSchemaTable method, and we could not find a way to work around this problem in Power BI.

I have submitted this as a support ticket to Microsoft and I look forward to their response on how to get a supported connection to SAS files.

The next step is to find another way:

Loading Data into the Dashboard file using R Script:

The best method I have found so far to read SAS files into Power BI is using R script:

https://businessintelligist.com/2016/09/09/reading-sas-sas7bdat-files-in-power-bi/

1) Locate your SAS Data set files (you can download a sample set in ZIP format from: http://www.principlesofeconometrics.com/sas.htm)

2) Install R locally (I have been using R 3.3.2 from CRAN: https://cran.r-project.org/bin/windows/base/)

3) Install Power BI Desktop locally:

Download Power BI Desktop from http://app.powerbi.com

4) Open Power BI, and first, set your version of R in Options and Settings:

a. Click the “x” in the upper right corner of the splash dialog box to close it.

c. You should be able to select your installed R version from the Detected R home directories list, and your R IDE from the Detected R IDEs list.

5) Now, set up the data source for your Power BI solution:

a. Open the Get Data drop-down and select More… -> Other -> R Script, then click the Connect button.

b. The Execute R Script dialog box provides an input location for a script that sets the working directory, loads the library and reads the file into Power BI.

c. Click OK to see the content of the SAS file, then select the table(s) to load (I haven’t tested files with multiple tables using R Script).

d. Select Load to load the data directly, or Edit to use the Query Editor to filter, clean, reformat or otherwise transform the data for this and all future data loads.

6) Click Close & Apply when the data set is complete and you are ready to use it in Power BI.
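For step 5b, a minimal sketch of the kind of script that goes into the Execute R Script dialog box might look like the following. It assumes the sas7bdat package from CRAN is installed in the R version selected in step 4. The folder `C:/SASData` and the file name `airline.sas7bdat` are placeholders for illustration, so replace them with your own location and file.

```r
# Run once in R (outside Power BI) if the package is not installed yet:
# install.packages("sas7bdat")

# Placeholder folder (assumption): the directory that holds your .sas7bdat files.
setwd("C:/SASData")

# Load the library that provides read.sas7bdat().
library(sas7bdat)

# Placeholder file name (assumption). The result is an R data frame,
# which Power BI offers as a table to load after you click OK.
dataset <- read.sas7bdat("airline.sas7bdat")
```

The variable name (`dataset` here) is what you should expect to see as the table name when selecting what to load in step 5c.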

Using a faster library:

I have found some information suggesting that haven is a faster library than sas7bdat. The sample SAS files that I have access to are not large enough to test this, but the haven library works very well.

Using haven, the script changes to reference the haven library (https://cran.r-project.org/package=haven) and to use the read_sas function from that library to read the SAS file, for example another data file, USA.
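A haven version of the script could be sketched as below. As before, the folder `C:/SASData` and the file name `usa.sas7bdat` are assumed placeholders, and the haven package is assumed to be installed already. The conversion to a plain data frame is a precaution on my part rather than a documented requirement.

```r
# Run once in R (outside Power BI) if the package is not installed yet:
# install.packages("haven")

# Placeholder folder (assumption): the directory that holds your .sas7bdat files.
setwd("C:/SASData")

# Load the library that provides read_sas().
library(haven)

# Placeholder file name (assumption) for the USA sample data set.
USA <- read_sas("usa.sas7bdat")

# read_sas() returns a tibble; convert it to a plain data frame as a precaution.
USA <- as.data.frame(USA)
```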

I am very interested to know what the results are with production-size, complex datasets, and I am willing to help in any way that I can if you have questions about using this method.

Publishing and Refresh of data:

The Power BI solution can be published to http://app.Powerbi.com using the Publish button. The R Script has already imported the dataset, so the data will be published to the Power BI Service along with the reports.

R Script as a data source does not yet support refresh. There is a setting for refreshing data from Files, but it does not work for R Script as a source; it is for CSV, etc.

Rachael